How can we ensure that AI is aligned with human values? What can AI teach us about human cognition and creativity?

Dr. Raphaël Millière is Assistant Professor in Philosophy of AI at Macquarie University in Sydney, Australia. His research primarily explores the theoretical foundations and inner workings of AI systems based on deep learning, such as large language models. He investigates whether these systems can exhibit human-like cognitive capacities, drawing on theories and methods from cognitive science. He is also interested in how insights from studying AI might shed new light on human cognition. Ultimately, his work aims to advance our understanding of both artificial and natural intelligence.

RAPHAËL MILLIÈRE

One very interesting question is what we might learn from recent developments in AI about how humans learn and process language. The full story is a little bit more complicated because, of course, humans don't learn from the same kind of data as language models. Language models learn from this internet-scraped data that includes New York Times articles, blog posts, thousands of books, a bunch of programming code, and so on. That's very different from the kind of linguistic input that children learn from, which is child-directed speech from parents and relatives that’s much simpler linguistically. That said, there is a lot of interesting research trying to train smaller language models on data that is similar to the kind of input that a child might receive when they are learning language. You can, for example, strap a head-mounted camera on a young child's head for a few hours every day and record whatever auditory information the child has access to, which would include any language spoken around or directed at the child. You can then transcribe that, and use that dataset to train a language model on the child-directed speech that a real human would have received during their development. So some artificial models are learning from child-directed speech, and that might eventually go some way towards advancing debates about what scientists and philosophers call “nativism versus empiricism” with respect to language acquisition (the nature/nurture debate): Are we born with a universal innate grammar that enables us to learn the rules of language, as linguists like Noam Chomsky have argued, or can we learn language and its grammatical rules just from raw data, just from hearing and being exposed to language as children?

If these language models that are trained on these kinds of realistic datasets of child-directed speech manage to learn grammar, to learn how to use language in the way children do, then that might put some pressure on the nativist claim that there is this innate component to language learning that is part of our DNA, as opposed to just learning from exposure to language itself.

THE CREATIVE PROCESS

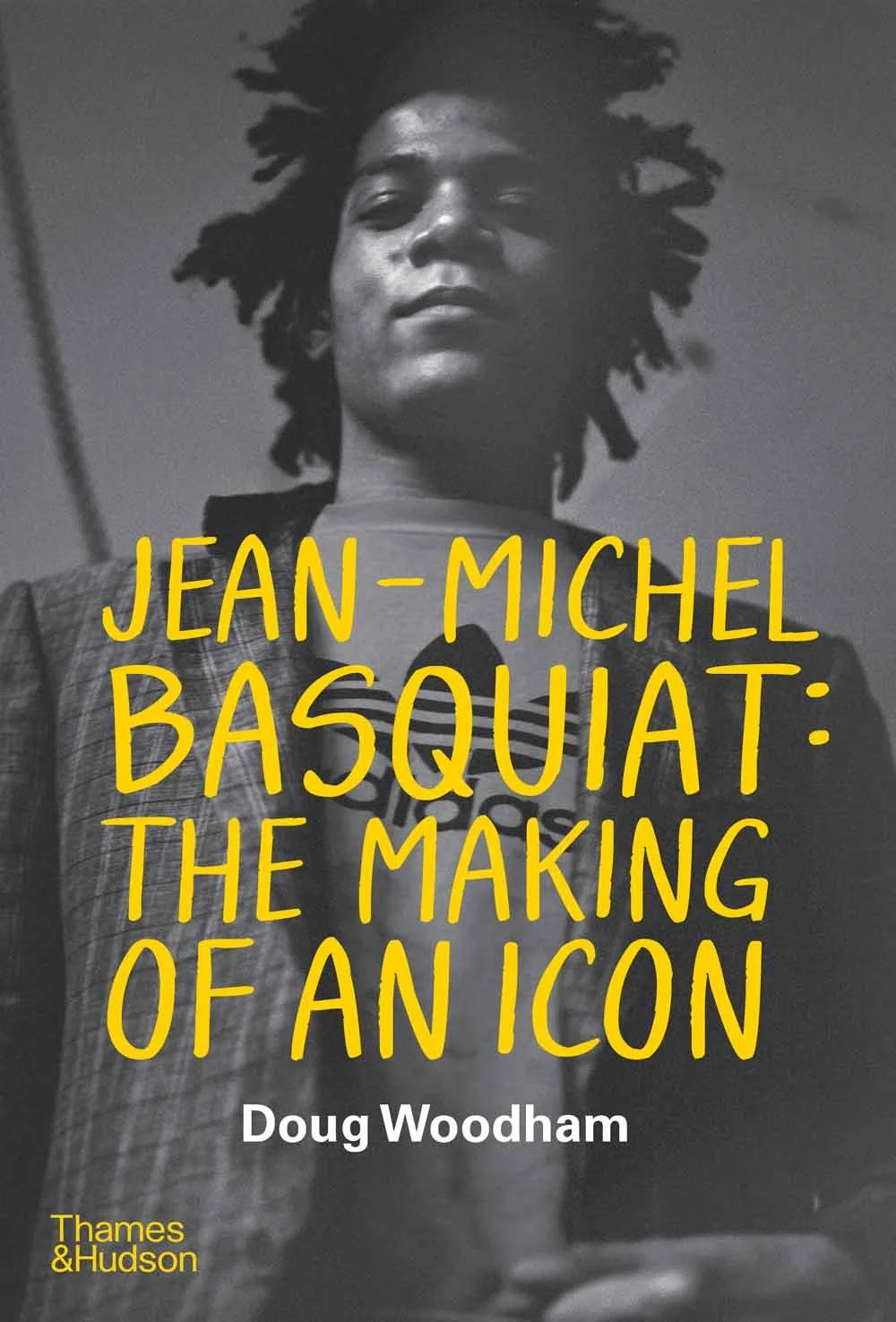

AI is not completely artificial intelligence. At some point down the line, it was human intelligence and creativity that fed these models. How do we value the creators who have become source material for AI systems?

MILLIÈRE

That's a very important and pressing question. What about plagiarism? What about exploitation of the work, vision, and labor of human artists whose outputs become part of the training data of these AI systems? How do we address this problem in a way that doesn't wrong any stakeholder, be it human artists, engineers, companies, or society at large? It's a very difficult question. There have been proposals, for example, to remunerate human artists whose artworks were part of the training data of the systems, and also to make sure that there's a way for them to opt out of having their works included in the training of the systems. I think these are definitely proposals that constitute a step in the right direction. If we don't want to make the creation of these AI systems illegal altogether, these are ways to perhaps reconcile technological innovation with fair treatment of human artists.

THE CREATIVE PROCESS

Like a lot of people, I was relieved when the EU AI Act was passed. Finally, governance and guardrails are being put in place. Of course, now it's a question of how we implement those regulations. For those who don't know, they've identified the high-risk areas on local, national, and international levels in a variety of fields, from public service management, law enforcement, and the financial sector to robotics, autonomous vehicles, the military, national security, and healthcare, where they have established the concept of the human guarantee. In your research, you explore the vulnerabilities of AI systems to being gamed by bad actors, even if there is regulation, since we still don't understand exactly how AI works. So, what are your thoughts on the vulnerabilities of those high-risk areas and the kind of pre-training limits that we need to put in place?

MILLIÈRE

Addressing AI’s Potential Dangers

There are different categories of potential harms that might result from AI in general. There are the most dramatic scenarios, the doomsday scenarios that some people have been envisioning, with much more advanced AI systems threatening the continued existence of humanity. These are, in my opinion, still far-fetched, if not science-fiction, scenarios, so I'd like to focus more on the immediate harms that the kinds of AI technologies we have today might pose. With language models, the kind of technology that powers ChatGPT and other chatbots, there are harms that might result from regular use of these systems, and then there are harms that might result from malicious use. Regular use would be how you and I might use ChatGPT and other chatbots to do ordinary things. There is a concern that these systems might reproduce and amplify, for example, racist or sexist biases, or spread misinformation. These systems are known to, as researchers put it, “hallucinate” in some cases, making up facts or false citations. And then there are the harms from malicious use, which might result from some bad actors using the systems for nefarious purposes. That would include disinformation on a mass scale. You could imagine a bad actor using language models to automate the creation of fake news and propaganda to try to manipulate voters, for example. And this takes us into the medium-term future, because we're not quite there, but another concern would be language models providing dangerous, potentially illegal information that is not readily available on the internet for anyone to access. As they get better over time, there is a concern that in the wrong hands, these systems might become quite powerful weapons, at least indirectly, and so people have been trying to mitigate these potential harms.

Fighting Monopoly with Open Source Models

We don't want companies to have a monopoly on this kind of discussion. We want to involve presumably everyone in a more democratic process, and regulation has a role to play in that. Currently there is a lot of enthusiasm about open source language models, software whose code is readily available for anyone to use, modify, or compile on their own computers. Sometimes they're called open source models. Perhaps a better term would be open weights models, because you can download the weights on your computer locally and you can fine-tune the model to your own preference. You can modify it to change its behavior in ways that you find useful. That's the potential avenue to resist the monopoly and the kind of monolithic vision of big companies like OpenAI, by having models that everyone can modify and deploy for their own custom purposes. The end result is that people now have some models they can download on their computer that are not quite as good as the best version of ChatGPT so far, but almost there, and they can tinker with that. So we are going to see a lot more custom models in the future.

THE CREATIVE PROCESS

What do you think schools will look like in 10, 15 years?

MILLIÈRE

I don't think universities and schools will be replaced by AI systems any more than, say, YouTube has replaced universities. I think there will always be a place for universities. As a professor and educator, I have a lot of these discussions with my colleagues. I would say it is a bit like the difference between trying to learn a language exclusively through Duolingo and actually learning a language through immersion in a linguistic community. Anyone who is serious about learning languages will tell you that nothing will replace actual immersion, and Duolingo does not compare. It could be useful to learn vocabulary, for example, but it will not replace the other important aspects of learning language. Learning in general can be like that. Having actual immersion in an educational context at the university, and interactions with educators, with a teacher, with a professor, this back-and-forth of writing essays, asking questions, receiving feedback, and being guided by an actual human being, I think people will always value that. But that's not to say that AI will not have an important role to play, and I think that educators will need to adapt and perhaps harness some of these tools for good. For example, one thing I've started doing with some of my students when it's appropriate (I did this when I taught the first class on the philosophy of AI at Columbia University) is to have some students actively use language models to generate arguments for and against a particular claim, and then in class assess the strength of these generated arguments and kind of tear them apart together to see whether we can poke holes in them. That's one way to use AI-related technology not as a substitute for critical thinking, but instead precisely as a way to stimulate students' critical thinking skills, and to make learning interactive in a way that wouldn't be possible without using these tools.

THE CREATIVE PROCESS

As you reflect on the future, education, the challenges we face, and the kind of world we're leaving for the next generation, what would you like young people to know, preserve and remember?

MILLIÈRE

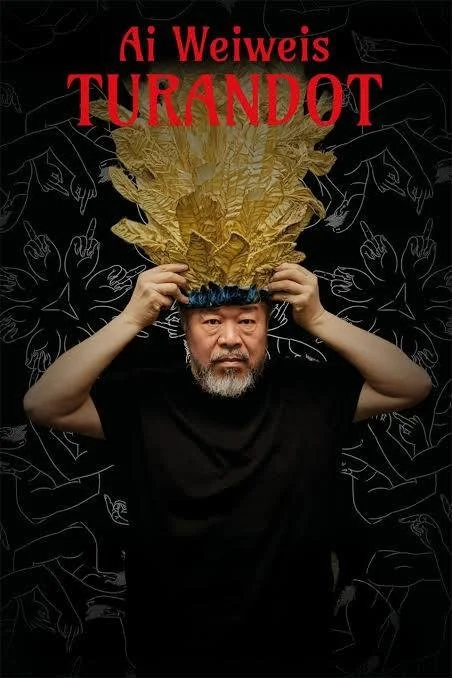

AI as Hope for the Future of Creativity

Before I decided to become a philosopher, I wanted to become a filmmaker, and in my education in France, where I'm originally from, there was a lot of emphasis placed on the humanities and arts. I still have a deep passion for the arts, and when it comes to our future, I am of two minds about the role that AI will play in how we interact with creative fields. Going back to my first love of cinema, there are various young filmmakers who are experimenting with AI systems to generate shots and short videos. They’re using it creatively. I think there is tremendous potential there to usher in a renaissance of surrealist cinema where we can generate images and shots that could never have been made with a traditional camera, certainly not without a huge budget. Lowering the barrier to entry to artistic creation is something I'm excited about. On the other hand, of course, there are the various issues we've raised about the potential for plagiarism, for exploiting human artists, and also concerns about people gradually losing artistic skills because we have these tools available, and about the homogenization of taste. How is AI going to influence the kind of art and entertainment content we like to consume? How does engagement with AI-generated artifacts change the way we relate to art? I don't have the answers to those questions, and I think it could go either way. I think it could come with good and bad. It could homogenize preferences and the little quirks we have in the way we engage with music, video, and literature, but it could also, on the other hand, supercharge creativity. That's perhaps the one thing I'd like to say about the future: I hope these new technologies are used to empower human creativity and human flourishing instead of stifling them.